We have all been there. You are a newly hired employee or intern, and it is your first day. Naturally, you have many questions going through your head about company policies, laptop setup, and other company logistics. Or maybe you are not a new hire, and like most humans, you don’t know everything (if you do, I am envious). Some questions feel too small to justify interrupting someone’s deep concentration on complicated engineering tasks or important business decisions. The question will eventually answer itself…surely.

That is where a chatbot swoops in to save the day. Its job is to provide complete and consistent answers to most HR, policy, and other work-related questions. No longer do you have to send short, rapid-fire questions to a colleague or supervisor: send them to the bot! Don’t worry, answering your questions is its only job, so it is glad you asked. Conveniently, it also has a nice home in our Slack workspace, so it is always one click or mention away.

Watson API

To get the chatbot up and running for the employees of Mission Data, it needs some brain power that can discern which answers to give to your beautifully-worded-and-definitely-not-vague questions that contain no typos whatsoever. This is achieved with IBM’s extremely competent Watson API. Watson takes your question, figures out how to categorize it, runs through its predefined conversation paths, and gives you the best answer it can. This is possible thanks to natural language processing (NLP).

NLP uses deep neural networks and statistical methods to analyze natural language and recognize the linguistic nuances and context that make up everyday speech. Artificial neural networks loosely model how biological brains work, using weighted associations between inputs and computed outputs. Much as humans learn from experience, a neural network is trained on examples, which shape those weighted associations. Once trained, the network uses its weighted associations to compute educated responses to new inputs. One technique used in deep neural network approaches is word embedding, which represents each word as a vector so that words with similar meanings end up close together in the vector space.
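
To make “close together in the vector space” concrete, here is a minimal Python sketch that measures closeness with cosine similarity, one common choice for comparing embeddings. The vectors are hypothetical and hand-picked for illustration; real embeddings are learned from large amounts of text and have far more dimensions, and this is not how Watson exposes its models.

```python
import math

# Hypothetical, hand-picked 3-dimensional vectors for illustration only.
# Real embeddings are learned from text and have hundreds of dimensions.
EMBEDDINGS = {
    "rainy": [0.9, 0.1, 0.0],
    "stormy": [0.8, 0.2, 0.1],
    "sunny": [0.7, 0.6, 0.0],
    "laptop": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other vocabulary word whose vector points closest to `word`'s."""
    target = EMBEDDINGS[word]
    candidates = [w for w in EMBEDDINGS if w != word]
    return max(candidates, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


print(most_similar("rainy"))  # stormy
print(round(cosine_similarity(EMBEDDINGS["rainy"], EMBEDDINGS["laptop"]), 2))  # 0.01
```

Weather words land near each other, while “laptop” points in an almost unrelated direction.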

Watson was originally developed to compete on the TV quiz show Jeopardy!, where it played against two of the show’s best human contestants, Brad Rutter and Ken Jennings (IBM Research made a wonderful video about it: “Watson and the Jeopardy! Challenge”). Spoiler alert: Watson won, thanks to its sophisticated artificial intelligence and natural language processing abilities. The win showed that Watson’s capabilities could be put to uses beyond game shows, such as healthcare utilization management decisions for lung cancer.

How does our chatbot know how to train, and on what, though? First, we need a new Watson Assistant instance. We can then give the Assistant skills so it can perform specific tasks. The type of skill we care about is a dialogue skill, which lets Watson take user questions or statements and figure out how to respond or which associated actions to perform. A dialogue skill has three key elements: intents, entities, and dialogue. Together, they interpret user input and settle on an appropriate response.

Intents

Intents categorize user input by purpose; in other words, they capture what the user is trying to find out or accomplish. You can think of intents as topics of dialogue. For example, suppose we create an intent called #Weather_Info (intent names are always prefixed with a ‘#’). We want the bot to classify any weather-related question or statement from the user as this intent. To do so, we give the intent examples of what a user might say about the weather. The examples could look something like this:

- “What is the weather like today?”

- “Is it rainy right now?”

- “Is it going to be sonny all dey?” (Including typos can be beneficial, since they are inevitable in user input.)

Watson then uses natural language processing and machine learning techniques to train on the examples. As mentioned earlier, training builds weighted associations between inputs and outputs, which Watson later uses to make educated decisions about inputs it has never seen. Once trained, Watson can detect the #Weather_Info intent in similarly phrased or related user input. Thus, if a user asks a question such as “Is it currently rainy?”, Watson recognizes the topic of weather and correctly identifies the #Weather_Info intent. When creating an intent, it is important to provide at least five unique examples; in general, the more examples Watson has to train with, the better it understands a user’s intent. Training examples can be entered manually or imported from CSV files.
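
Watson’s actual classifier is a trained machine learning model whose internals are not exposed, so the following is only a rough stand-in for the idea of learning from examples: a Python sketch that gives a new utterance the intent of its most similar training example, using word overlap (Jaccard similarity) as the similarity measure. The second intent, #Laptop_Setup, its examples, and the 0.2 threshold are hypothetical.

```python
import re

# Training examples in the spirit of #Weather_Info above, plus a second,
# hypothetical intent so the classifier has something to choose between.
TRAINING_EXAMPLES = {
    "#Weather_Info": [
        "What is the weather like today?",
        "Is it rainy right now?",
        "Is it going to be sonny all dey?",
    ],
    "#Laptop_Setup": [
        "How do I set up my laptop?",
        "Who do I ask about a new laptop?",
        "My laptop will not connect to the VPN",
    ],
}


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Fraction of tokens two sets share (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(utterance, threshold=0.2):
    """Return the intent of the most similar training example, or None."""
    tokens = tokenize(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in TRAINING_EXAMPLES.items():
        for example in examples:
            score = jaccard(tokens, tokenize(example))
            if score > best_score:
                best_intent, best_score = intent, score
    # Below the threshold, treat the input as unrecognized.
    return best_intent if best_score >= threshold else None


print(classify("Is it currently rainy?"))  # prints #Weather_Info
```

“Is it currently rainy?” never appears in the training data; it is matched through its overlap with “Is it rainy right now?”. A real model generalizes much further than word overlap can, for example to synonyms that share no words with any example.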

Watson Assistant provides some common intents (with examples) in its “Content Catalog”. I like to use the prebuilt #General intents to recognize casual conversation from the user, as they make my bot seem friendlier. We can add these intents to our skill’s intent list with the “Add to skill” button.

Classifying user input into intents helps Watson decide which response to return, and this is where entities and dialogue come into play.

Entities

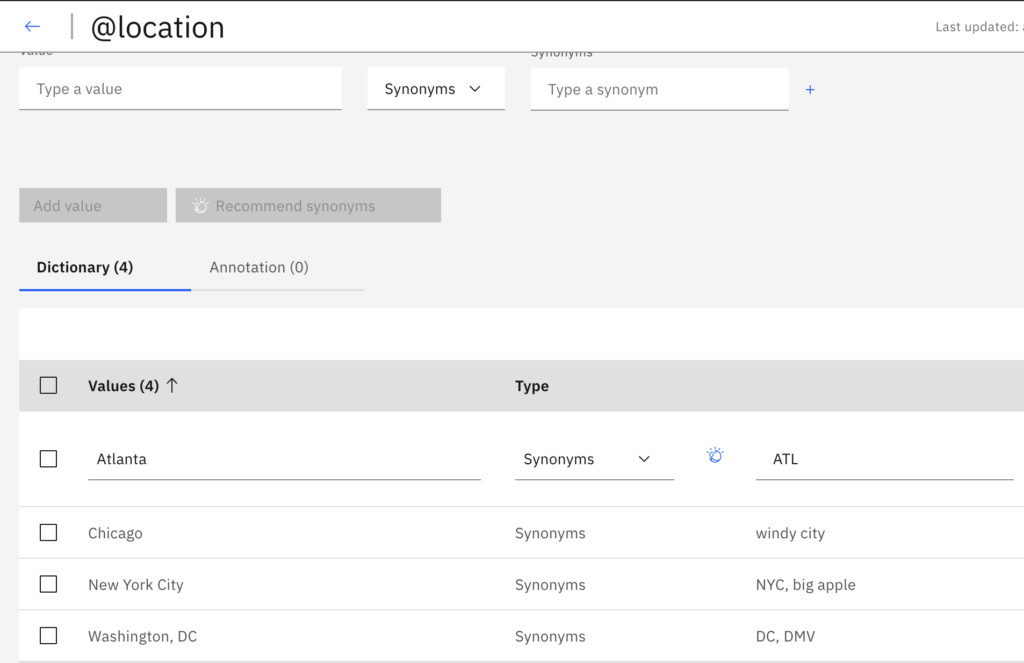

Entities, prefixed with an ‘@’ character, categorize input much like intents do, but they are more concrete. Entities capture the specifics of a user’s question, such as particular @locations (e.g., Chicago), @names (e.g., School of Engineering), or @holidays (e.g., Christmas). Pulling these details out of the user’s input lets the assistant return less generic answers, which makes the chatbot seem more intelligent.

An entity such as @location has a dictionary of values. The dictionary might include values such as “Atlanta”, “New York City”, and “Chicago”. If a user asked “What is the weather in Chicago right now?”, Watson would recognize the @location entity with the value “Chicago”, written in shorthand as @location:Chicago. Combining intents and entities, Watson can now recognize that a question like “How is the weather in New York City?” has the intent (category) #Weather_Info and the entity (specific instance) @location:(New York City); the parentheses are needed because the value contains spaces.
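
A dictionary-based entity can be approximated with simple phrase matching. The Python sketch below is a simplified, hypothetical model rather than Watson’s implementation (Watson also supports features such as synonyms for entity values, which are not modeled here): it scans the input for each dictionary value and reports matches in the @entity:value shorthand.

```python
import re

# Hypothetical entity dictionaries modeled on the @location example above.
ENTITIES = {
    "@location": ["Atlanta", "New York City", "Chicago"],
    "@holiday": ["Christmas", "Thanksgiving"],
}


def extract_entities(utterance):
    """Return (entity, value) pairs whose dictionary value appears in the text."""
    found = []
    for entity, values in ENTITIES.items():
        for value in values:
            # Whole-word, case-insensitive match, so "chicago" also counts.
            pattern = r"\b" + re.escape(value) + r"\b"
            if re.search(pattern, utterance, re.IGNORECASE):
                found.append((entity, value))
    return found


def shorthand(entity, value):
    """Format a match as @entity:value, wrapping multi-word values in parentheses."""
    return f"{entity}:({value})" if " " in value else f"{entity}:{value}"


matches = extract_entities("How is the weather in New York City?")
print([shorthand(e, v) for e, v in matches])  # ['@location:(New York City)']
```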

Like intents, Watson Assistant provides us with common, predefined entities that may be added to our skill. You can find them in the “System Entities” tab and include one by toggling on its “Status” switch.

Dialogue

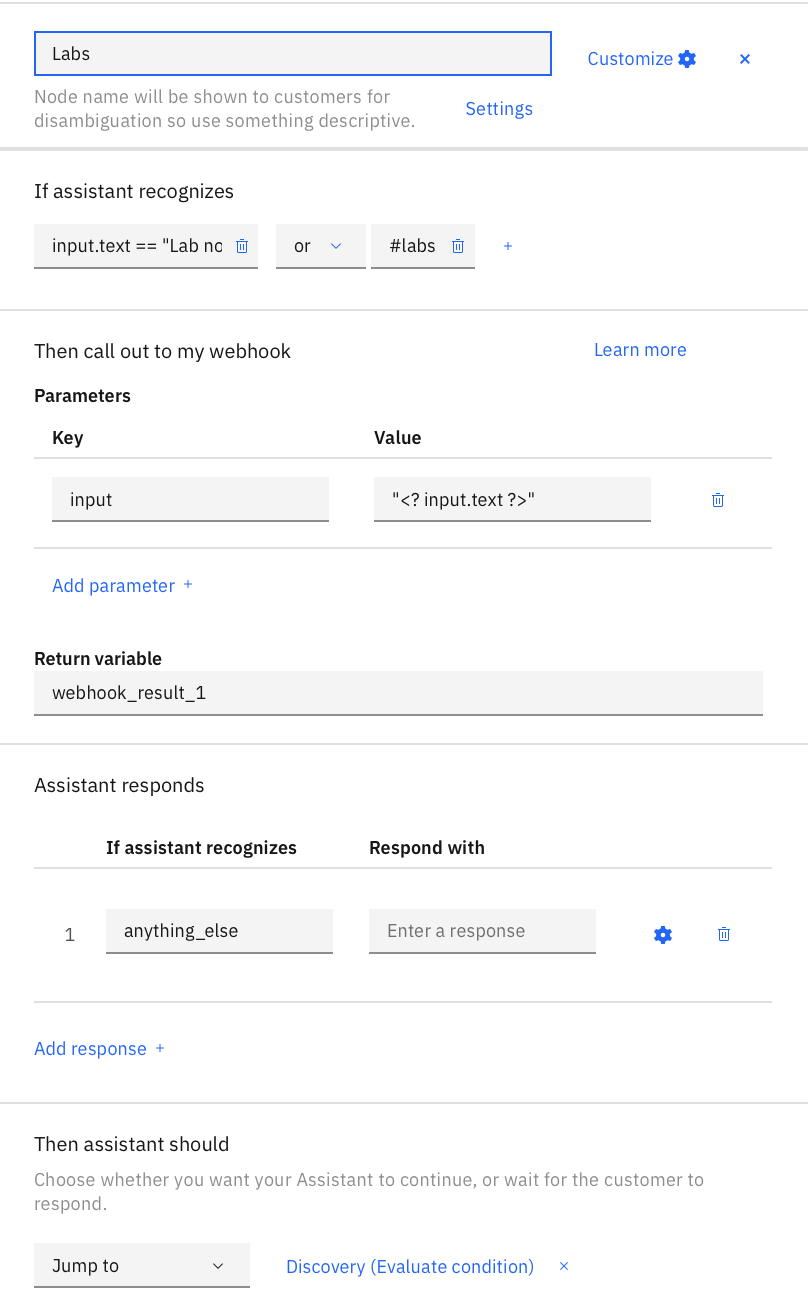

Intents and entities are great and all, but what happens after Watson identifies what the user is asking? At this point, Watson Assistant is as useful as someone who can understand French but cannot speak it. This is where dialogue comes in. Watson Assistant’s dialogue is a tree of dialogue nodes, and each node has a condition that activates it. Conditions can consist of intents, entities, context variables (we’ll explain these soon), or combinations of the three joined with boolean logic, much like a conditional statement in a traditional programming language. Once it has identified the intents and entities in the input, Watson checks the nodes in the tree one by one, from top to bottom. When it finds a node whose condition is satisfied, Watson performs that node’s action(s).
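
The top-to-bottom evaluation can be modeled in a few lines of Python. This is a simplified sketch, not Watson’s internals: the `Turn` and `Node` classes, the hypothetical #Holiday_Info intent, and the use of Python lambdas in place of Watson’s condition expressions (such as `#Weather_Info && @location`) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Turn:
    """What was recognized in one user input (simplified, hypothetical model)."""
    intents: set = field(default_factory=set)
    entities: dict = field(default_factory=dict)  # e.g. {"@location": "Chicago"}
    context: dict = field(default_factory=dict)   # e.g. {"$city": "Seattle"}


@dataclass
class Node:
    name: str
    condition: Callable[[Turn], bool]  # stands in for a Watson condition expression
    response: str


# Nodes are checked top to bottom; the first satisfied condition wins.
TREE = [
    Node(
        "weather",
        # Roughly: #Weather_Info && (@location || $city)
        lambda t: "#Weather_Info" in t.intents
        and ("@location" in t.entities or "$city" in t.context),
        "Let me look up the weather for that city.",
    ),
    Node(
        "holidays",
        # Roughly: #Holiday_Info || @holiday
        lambda t: "#Holiday_Info" in t.intents or "@holiday" in t.entities,
        "Here is the company holiday schedule.",
    ),
]


def respond(turn):
    """Return the response of the first node whose condition holds, else None."""
    for node in TREE:
        if node.condition(turn):
            return node.response
    return None


print(respond(Turn(intents={"#Weather_Info"}, entities={"@location": "Chicago"})))
# Let me look up the weather for that city.
```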

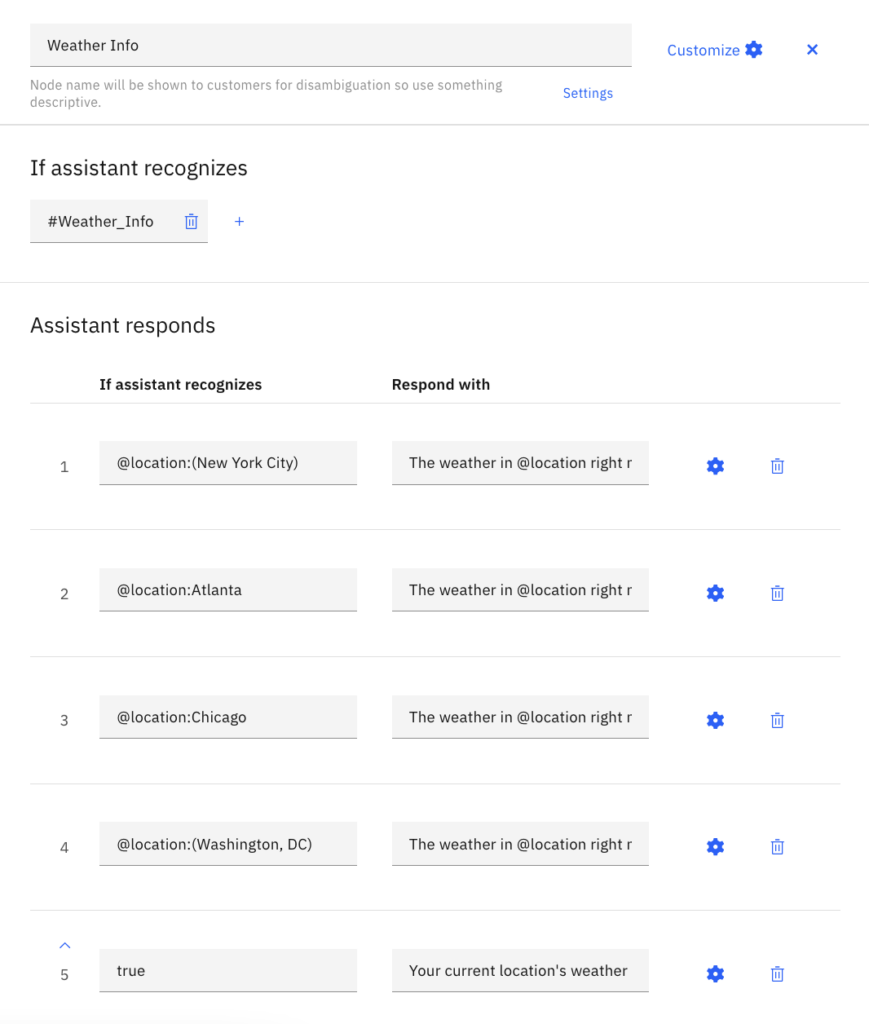

A node may contain one or more actions. Actions include responding to the user with a message or image, calling out to a webhook for more complicated functionality, and jumping to another node, among other things. If a node has one action, it is performed as soon as the node’s condition is satisfied. To give a node multiple actions, we enable a feature called multiple conditioned responses (MCR). Each action then gets its own condition within the node, again built from intents, entities, or context variables. The conditions are checked in order, and the first one satisfied triggers its action. Say you have a dialogue node that answers questions about the weather for a given location, but you also want it to default to the user’s current location if no location entity is given. With MCR, the node is activated by the #Weather_Info intent and then has two conditioned responses:

- A response that recognizes an entity of @location for when the user provides a specific location.

- A fallback response that has a condition of true and pulls the weather for the user’s current location.

Without MCR, you would need two nodes with two different conditions to achieve the same logic as the example above. MCR lets you condense more complicated conditional logic into a single dialogue node. In short, dialogue nodes let Watson converse with a user by performing one or more actions in response to a given input.
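
Here is the weather example as a Python sketch of a single node with multiple conditioned responses. `get_weather` is a hypothetical placeholder for whatever lookup a real bot would perform (for instance through a webhook), and the user’s current location is simply passed in as a parameter.

```python
def get_weather(city):
    """Hypothetical placeholder for a real weather lookup (e.g. via a webhook)."""
    return f"Fetching the forecast for {city}."


def weather_node(intents, entities, user_location):
    """One dialogue node with multiple conditioned responses (MCR)."""
    if "#Weather_Info" not in intents:
        return None  # node condition not met; evaluation moves on to the next node

    # (condition, action) pairs, checked in order; the first satisfied one fires.
    # Actions are lambdas so that only the chosen one runs.
    conditioned_responses = [
        ("@location" in entities, lambda: get_weather(entities["@location"])),
        (True, lambda: get_weather(user_location)),  # fallback, like condition `true`
    ]
    for satisfied, action in conditioned_responses:
        if satisfied:
            return action()
    return None


print(weather_node({"#Weather_Info"}, {"@location": "Chicago"}, "Atlanta"))
# Fetching the forecast for Chicago.
print(weather_node({"#Weather_Info"}, {}, "Atlanta"))
# Fetching the forecast for Atlanta.
```

Because the conditions are checked in order, the `True` fallback must come last; placed first, it would shadow the @location response.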

Putting intents, entities, and dialogue together: when the user asks “What is the weather in Chicago right now?”, Watson identifies the #Weather_Info intent and the @location:Chicago entity. If a node with the condition #Weather_Info && @location:Chicago exists, it is triggered and its actions are performed. The action might be a response such as “It is rainy in Chicago! Be sure to grab an umbrella before heading out.” or an image or GIF of a rain cloud.

Note that, by default, Watson Assistant creates two dialogue nodes when we create a skill: Welcome and Anything Else. The Welcome node sits at the top of the tree and is triggered when a user first starts chatting with the bot, without waiting for user input, so its responses usually explain what the bot is for and how it can help. The Anything Else node sits at the bottom of the tree and responds to any input that the nodes above it cannot recognize. Because it catches everything else, the bot can handle any input; its responses typically ask the user to rephrase their question or to ask a different one altogether. Since the Welcome node introduces the bot and the Anything Else node handles fallback input, any node we create belongs between these two predefined nodes.
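
The following Python sketch shows why that ordering matters. It is a hypothetical model, not Watson code: a `None` turn stands for the start of the conversation (in Watson, the Welcome node uses the special `welcome` condition), and the Anything Else node’s always-true condition plays the role of Watson’s `anything_else` condition.

```python
# Each node: (name, condition, response). Order matters: top to bottom.
TREE = [
    # Welcome: fires when the conversation starts, before any user input.
    # Watson uses the special `welcome` condition; here, turn is None.
    ("Welcome", lambda turn: turn is None,
     "Hi! I can answer HR, policy, and other work questions."),
    # Nodes we create go in between.
    ("Weather", lambda turn: "#Weather_Info" in turn["intents"],
     "Let me check the weather for you."),
    # Anything Else: always true, like Watson's `anything_else` condition,
    # so it catches whatever the nodes above did not.
    ("Anything Else", lambda turn: True,
     "Sorry, I didn't understand. Could you rephrase your question?"),
]


def route(turn):
    """Return (node name, response) for the first node whose condition holds."""
    for name, condition, response in TREE:
        if condition(turn):
            return name, response
    raise RuntimeError("unreachable: Anything Else always matches")


print(route(None)[0])                            # Welcome
print(route({"intents": {"#Weather_Info"}})[0])  # Weather
print(route({"intents": set()})[0])              # Anything Else
```

If Anything Else were moved above the Weather node, its always-true condition would catch every input first, which is why custom nodes go between the two defaults.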

Context Variables and Slots

One thing we have not yet discussed is how to get Watson to remember bits of information across multiple turns of a conversation. So far, Watson forgets any recognized information, such as intents and entities, as soon as a new user input arrives for processing; i.e., Watson loses context with each new user input.

Earlier, in the dialogue section, we briefly mentioned context variables, which, as the name suggests, give Watson context across multiple user inputs. Context variables are prefixed with a ‘$’ character and can hold information such as text or numbers. They are extremely handy if you want Watson to carry on a conversation about a topic, like so:

“Hey Watson, what is the weather in Seattle right now?”

“It is currently 99 degrees F in Seattle.”

“What about on Tuesday?”

“It will average 89 degrees F in Seattle on Tuesday.”

In that example, you could store the city the user asked about (Seattle) in a context variable, say $city, so that Watson isn’t utterly lost if the user keeps asking about Seattle weather. Without storing Seattle in a context variable after the first question, Watson would have no idea how to respond to “What about on Tuesday?”.
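
Here is a minimal Python sketch of the Seattle conversation, assuming a hypothetical `@day` entity and made-up forecast data taken from the exchange above. The `context` dictionary outlives individual turns, which is what lets the follow-up question be answered.

```python
# Made-up forecast data matching the conversation above.
FORECASTS = {("Seattle", "now"): 99, ("Seattle", "Tuesday"): 89}


class Session:
    """Holds context variables that survive from one user input to the next."""

    def __init__(self):
        self.context = {}

    def handle(self, entities):
        """Answer one turn, given the entities recognized in that input."""
        # Remember the city whenever the user names one.
        if "@location" in entities:
            self.context["$city"] = entities["@location"]
        city = self.context.get("$city")
        if city is None:
            return "Which city are you asking about?"
        day = entities.get("@day", "now")  # hypothetical @day entity
        temp = FORECASTS.get((city, day))
        if temp is None:
            return f"Sorry, I have no forecast for {city} ({day})."
        return f"{city}, {day}: {temp} degrees F"


session = Session()
print(session.handle({"@location": "Seattle"}))  # Seattle, now: 99 degrees F
print(session.handle({"@day": "Tuesday"}))       # Seattle, Tuesday: 89 degrees F
```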

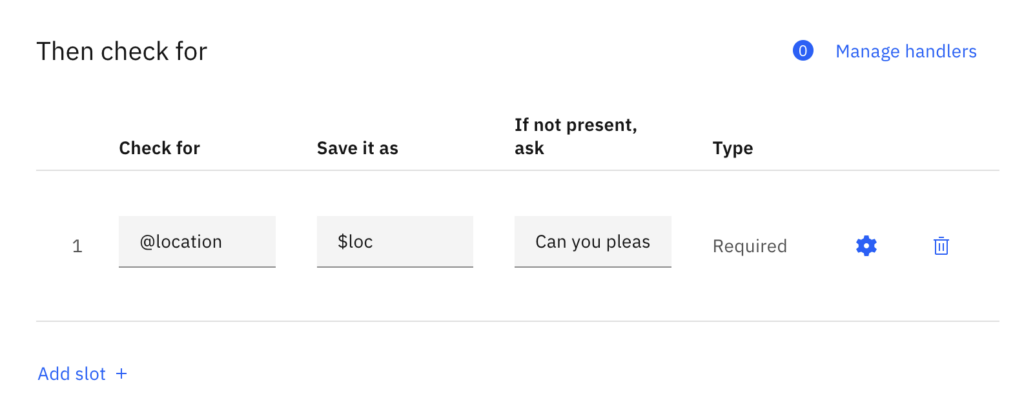

But how do we save recognized information into context variables? Slots let us take a recognized intent, entity, or another context variable and save it into a new context variable. Slots can also be made required: if the value is not present, Watson keeps asking the user until they provide it. This is rather useful when a situation needs to be escalated to a human assistant because the chatbot cannot provide a response: the context variables can be handed off to the human assistant to provide, well, context for the escalation.
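
Slot filling boils down to two steps: save whatever values were recognized, then prompt for the first required value that is still missing. The Python sketch below models that loop; the `Slot` class, the hypothetical `@day` entity, and the prompts are assumptions for illustration, since Watson Assistant runs this logic for you once slots are configured on a node.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    entity: str    # what to look for in the input, e.g. "@location"
    variable: str  # context variable to save it into, e.g. "$city"
    prompt: str    # question to ask while the value is still missing


def fill_slots(slots, context, entities):
    """Save recognized values into context; return the next prompt, or None when done."""
    for slot in slots:
        if slot.entity in entities:
            context[slot.variable] = entities[slot.entity]
    for slot in slots:
        if slot.variable not in context:
            return slot.prompt  # required value still missing: ask for it
    return None  # every slot is filled


SLOTS = [Slot("@location", "$city", "Which city?"), Slot("@day", "$day", "Which day?")]
context = {}
print(fill_slots(SLOTS, context, {}))                        # Which city?
print(fill_slots(SLOTS, context, {"@location": "Seattle"}))  # Which day?
print(fill_slots(SLOTS, context, {"@day": "Tuesday"}))       # None
print(context)  # {'$city': 'Seattle', '$day': 'Tuesday'}
```

Once `fill_slots` returns `None`, the `context` dictionary holds everything gathered so far, which is the kind of information you would pass along when escalating to a human.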

In the next article in this series, we will look at how to incorporate advanced search features into a chatbot using Watson Discovery, and how to take a chatbot implemented with Watson Assistant and deploy it in a Slack workspace via Slack’s API.

Did you find this topic interesting and have a project in mind? Let’s talk: www.growthaccelerationpartners.com/get-started.